Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

This is a page not in the main menu.

Published:

This post will show up by default. To disable scheduling of future posts, edit config.yml and set future: false.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

B+ Tree data structure for generic types. Supports all features of Standard Template Library (STL) container.

Developed a Customer Feedback Analysis Model to analyze the customer review data of a company, and help identify critical issues based on their severity and impact on customers as well as management.

Trained an agent to play the game of Enduro using Imitation Learning on the OpenAI gym environment.

Performed Semantic Segmentation on the Indian Driving Dataset using custom trained DeepLabV3+ network. Worked on low light enhancement techniques to improve the model’s accuracy.

Awarded Best Completed Submission winner in the Intel - PESU Student Contest.

Developed a custom EfficientNet model to detect secret data hidden within images. Won a silver medal for finishing in the top 3% of all teams (ranked 32 of 1,095).

Published in the 10th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2021)

In this paper, we analyze how well capsule networks adapt to new domains by experimenting with multiple routing algorithms and comparing them with CNNs.

Capsule networks are a deep learning architecture that overcomes some of the shortcomings of Convolutional Neural Networks (CNNs). They aim to capture spatial relationships between parts of an object and to exhibit viewpoint invariance. In practical computer vision, the training data distribution differs from the test distribution, and the resulting covariate shift degrades the performance of the model. This problem is called domain shift. In this paper, we analyze how well capsule networks adapt to new domains by experimenting with multiple routing algorithms and comparing them with CNNs.

Our hypothesis is that capsule networks will exhibit a smaller domain shift than CNNs. The motivation is that, since capsule networks claim to capture the spatial relationships between parts of an object, they should be less susceptible to domain shift than CNNs. We analyse three routing techniques, namely Dynamic Routing, EM Routing and Self Routing, and evaluate their effect on domain shift.

We evaluate performance on three source-target pairs. We train a model on the source dataset and evaluate its performance on the target dataset. The source and target datasets contain the same classes but come from different distributions. The analysis uses the pairs SVHN to MNIST, MNIST to MNISTM, and CIFAR-10 to STL-10.
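The source-to-target protocol above can be sketched in a few lines. This is a minimal illustration, not the paper's evaluation code: the function names are hypothetical, and summarizing domain shift as the drop in accuracy from source test data to target test data is an assumption made here for clarity.

```python
import numpy as np

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    return float((y_true == y_pred).mean())

def accuracy_drop(src_true, src_pred, tgt_true, tgt_pred):
    """Accuracy on the source domain minus accuracy on the target domain.

    Assumption: a larger drop is read as a larger domain shift. The model
    producing src_pred and tgt_pred is trained on source data only.
    """
    return accuracy(src_true, src_pred) - accuracy(tgt_true, tgt_pred)

# Toy labels standing in for a source/target pair with shared classes.
drop = accuracy_drop([0, 1, 2, 3], [0, 1, 2, 3],   # source: all correct
                     [0, 1, 2, 3], [0, 1, 0, 0])   # target: half correct
print(drop)
```

Under this reading, a model with a smaller drop adapts better to the new domain.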

We observe that EM routing performs well among the routing techniques and, most of the time, performs better than CNNs at minimizing domain shift.

In this paper, we have carried out a comprehensive analysis of Domain Shift in Capsule Networks by considering different routing algorithms. Using a Capsule network with different routing techniques, we examined how well these models adapt to new domains. Further work can be done to use Capsule networks for domain adaptation and domain generalization.

Download here

Published in PMLR: NeurIPS 2020 Competition and Demonstration Track, 2020

The competition concept, protocols, and solutions of the top-three teams at the competition are described in full in this paper. Please refer to Section 3.2 and Appendix B of the paper for more details regarding our proposed solution.

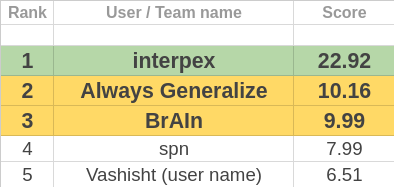

Published in 1st Runner Up in Predicting Generalization in Deep Learning, NeurIPS 2020 Competition Track

In this work, we developed a simple yet effective method to predict the generalization performance of a model by using the concept that models that are robust to augmentations are more generalizable than those which are not.

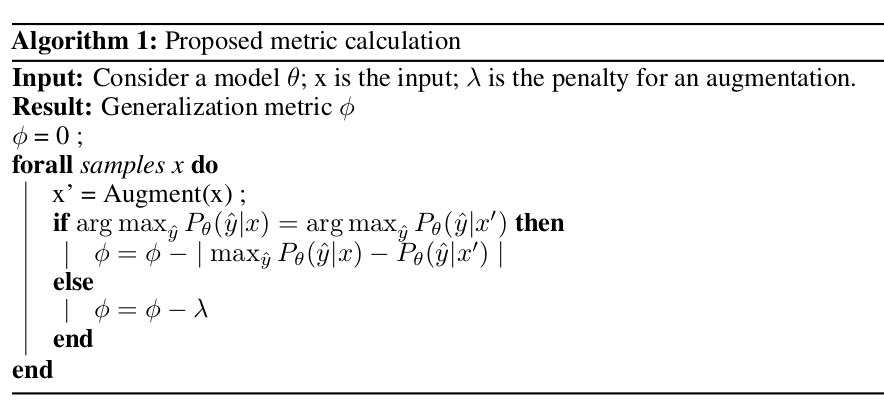

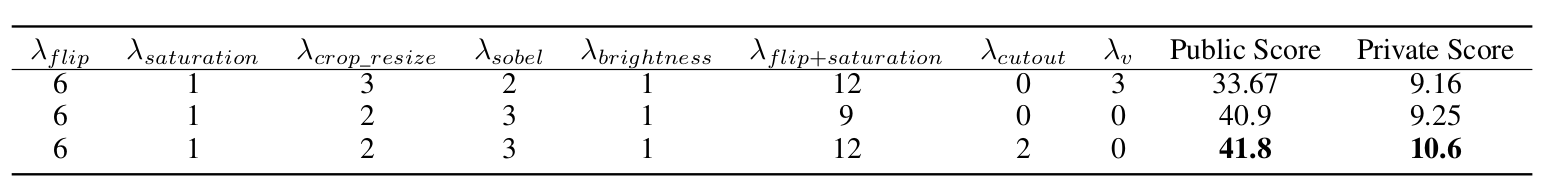

Generalization is the ability of a model to predict on unseen domains and is a fundamental task in machine learning. Several generalization bounds, both theoretical and empirical, have been proposed, but they do not provide tight bounds. In this work, we propose a simple yet effective method to predict the generalization performance of a model by using the concept that models that are robust to augmentations are more generalizable than those which are not. We experiment with several augmentations and compositions of augmentations to check the generalization capacity of a model. We also provide a detailed motivation behind the proposed method. The proposed generalization metric is calculated based on the change in the model’s output after augmenting the input. The proposed method was the first runner-up solution for the competition “Predicting Generalization in Deep Learning”.

The generalization gap of a model is defined as the difference between the estimated risk of a target function and the empirical risk of a target function.

The task of the competition was to predict the generalization of a model through a complexity measure that maps the model and dataset to a real number. This real number indicates the generalization ability of the model. The models are ranked based on the consistency with the actual generalization performance.

More details about the competition can be found in the Competition website.

Details of the tasks and evaluation metric can be found in this paper.

The proposed method is based on a simple hypothesis that a model capable of generalizing must be robust to augmentations. A model’s output should not change significantly when certain augmentations are performed on the input. The model should confidently predict the augmented input if it has learned the correct features of a particular class.

For every sample, we augment the input and then compare the class prediction of the model for the original input and augmented input. If the class prediction is the same even after the input is augmented, then we add a penalty equal to the difference between probabilities of the predicted class on the original and augmented input.
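The same-prediction case described above can be sketched as follows. This is a hedged illustration rather than the submitted solution: the function name is hypothetical, taking the absolute value of the probability difference is an assumption, and because this passage does not say how a changed prediction is penalized, the sketch only counts those samples instead of inventing a penalty for them.

```python
import numpy as np

def augmentation_penalty(probs_orig, probs_aug):
    """Compare model outputs on original and augmented inputs.

    probs_orig, probs_aug: arrays of shape (n_samples, n_classes) holding
    class probabilities for the original and the augmented inputs.
    Returns (penalty, n_changed), where penalty sums the probability
    differences over samples whose predicted class is unchanged, and
    n_changed counts samples whose predicted class changed.
    """
    probs_orig = np.asarray(probs_orig, dtype=float)
    probs_aug = np.asarray(probs_aug, dtype=float)
    pred_orig = probs_orig.argmax(axis=1)
    pred_aug = probs_aug.argmax(axis=1)
    same = pred_orig == pred_aug
    rows = np.arange(len(pred_orig))
    # Probability of the originally predicted class, before and after augmentation.
    diff = np.abs(probs_orig[rows, pred_orig] - probs_aug[rows, pred_orig])
    return float(diff[same].sum()), int((~same).sum())

penalty, n_changed = augmentation_penalty(
    [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8]],   # original inputs
    [[0.7, 0.3], [0.3, 0.7], [0.1, 0.9]],   # augmented inputs
)
print(penalty, n_changed)
```

In the toy data, the first and third samples keep their predicted class and contribute to the penalty, while the second sample's prediction flips and is only counted.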

The metric used in the competition was Conditional Mutual Information as described in this paper.

The higher the score, the better the generalization metric.

This method tests the model with augmented samples from the training data and penalizes models that are unable to correctly classify augmented samples. We have also provided an insight into the role of augmentations in testing the generalizability of a model.

Download here

Published in ICCV 2021

Published:

This is a description of your talk, which is a markdown file that can be markdown-ified like any other post. Yay markdown!

Published:

This is a description of your conference proceedings talk. Note the different field in type. You can put anything in this field.

Undergraduate course, University 1, Department, 2014

This is a description of a teaching experience. You can use markdown like any other post.

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.